Raft!

4/21/2022

I first encountered the Raft consensus algorithm in 2017, at a Papers We Love - San Diego presentation by Chris Hiestand on the paper.

In the years following the presentation, I’d found myself returning to the paper because it intrigued me and I kept seeing Raft referenced in other projects. Then, in 2021, I took my printed copy of the Raft paper with its highlighted chunks and marginalia to David Beazley’s class on building Raft.

I’d been compelled to take Beazley’s class because the problem of Raft just continued to stick around in my brain (and fortunately my employer offered to send me!). For the class, we implemented Raft in whatever language we preferred after first solving some smaller distributed systems problems (a traffic light system and one other one which I vaguely remember may have been a key-value store…).

Let me be the first tell you: building Raft in a week based on the paper is difficult. Still, by the end of the week I had a basic repository in Python with a pretty basic implementation using threads, clocks, and queues. There was no persistence or snapshotting in my implementation, but the core components were present and it was possible to imagine how to extend my basic project to gain persistence (writing to and reading from disk, for instance).

Why This Problem

After the class I found that I wanted to keep hacking on it, to keep chewing on the problem, and I ended up hacking on my Raft implementation for about half a year more. First I added an async runtime, thinking that maybe some of my Raft difficulties were actually difficulties managing threads-and-queues. My async version did seem simpler. Outside of my Python implementation, I even toyed with a side project in Haskell to see if I could use compile-time guarantees to make a Raft that was easier for me to reason about. Dear reader, I even came pretty close to using GADTS in my Haskell implementation, but I talked myself off the hobby-software-project ledge there.

Eventually, work on my Raft implementations dried up in a manner akin to what always happens with my open source toys: I moved on to some new shiny thing. But Raft continues to live in my brain rent-free! And I’m not alone: just look at all of these projects based on Raft:

I don’t know what it is about Raft that keeps drawing me back, but I think it’s a good example of the core attribute of distributed systems that I find compelling: these problems seem like they should be solvable but they quickly overrun the capacity of the human mind to hold all the aspects of the problem in one’s head at the same time.

In other words, these problems seem straightforward but actually implementing them is bedeviling. You start your software-implementation truck and then discover you’ve run right into an invisible brick wall made out of time and failing networks and no guarentees about anything.

Raft Aspects: Leader Election and Storage Consistency

As I recall, there are the two primary components of Raft and in hindsight it’s surprising what seemed hard contrasted with what I found to be actually hard:

- Leadership Election

- Storage Consistency

Surprsingly Not the Hardest Part: Leader Election

As a consensus algorithm, the problem Raft solves is achieving consistent data between different nodes in a distributed system. To achieve consensus, Raft relies on a single leader node (the Raft paper calls nodes “Servers”), and this leader will determine which data has been successfully added to the cluster.

In order to reliably have a single leader in the cluster, Raft has a novel solution for electing leaders and when I read the Raft paper and attended the presentation, I was most taken in by this aspect: Raft’s leader election sounds creative, interesting, and potentially tricky to work through. I’ll try to give a brief description of it below.

First, Raft has three different types of participants in a cluster: Leaders, Candidates, and Followers. Followers receive and process messages from Leaders, but there will be only one Leader at a time. Each message has something like a version number, which is called the “term”, which only gets incremented when there is a leader election in the cluster. If any node thinks it’s a Leader but it receives a later term message than it knows about, it immediately converts itself into a Follower and starts listening to the Leader that sent the message.

Further, Leaders will send out empty messages as a kind of heartbeat in order to maintain their leadership. For a Follower, receiving one of these messages acts as confirmation that the Leader is still alive and still processing messages. What do these nodes do when the Leader goes quiet?

That situation is covered by Candidates. Each node has an internal clock and an attribute called the “election timeout” which is a value randomly chosen from a starting configuration. If no messages have been received in this randomly selected interval, then the Follower declares itself a Candidate and emits request-for-vote messages to all other nodes in the cluster. Further if there are multiple Candidates simultaneously asking for votes, and their election timeout goes off again, they will start a new election.

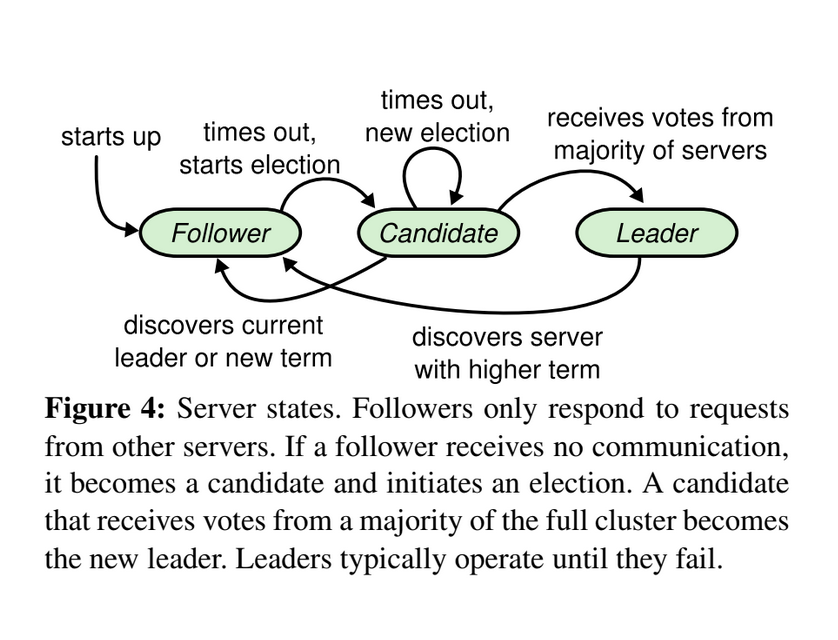

The paper shows the states and state transitions a node can see in a useful diagram:

There’s also a great visualization of the leader election process over on raft.github.io that does it much greater justice than I can in my basic description here and I encourage anyone following along to check that out.

In truth, I’m glossing over a bit here, in order to get to this point: when I read the paper and thought most about building Raft, I thought leadership election would the most dense and error-prone of my implementation, and I was surprised when it wasn’t. The reason it wasn’t is that the state transitions between node types and the behaviors of each node type are well-defined and thus straightforward to encode in a program:

- A Follower updates its state upon receiving messages from a Leader

- A Leader sends out messages (and heartbeats)

- If a Follower does not hear from a Leader in a period of time (randomly selected from “election timeout” for the cluster), then

- It converts itself to a Candidate and issues “request for vote” RPC messages.

In short, the following transitions are allowed:

- Follower -> Candidate

- Candidate -> Follower

- Candidate -> Candidate (if no Leader elected in last election)

- Candidate -> Leader

- Leader -> Follower

And that’s it!

Note: I don’t mean to imply here that implementing Raft’s leader election algorithm is easy and there are certainly some tricky details to getting this right, which is why, for example, etcd, a raft-based database, has a non-voting “Learner” role in their version of Raft.

Probably the Hardest Part: Storage Consistency

The hardest part for me in implementing the Raft paper was in trying to actually pin down the consistent data parts. For example, there’s an “append entries” RPC described in the Raft paper and when a Raft “server” accepts one of these messages, it must perform some logic to check whether it should process the message (based on the message term) and then it will update its internal state and potentially advance its “commit index”.

Here’s my Follower class with its handle_append_entries_message method:

def handle_append_entries_message(self, event: Event) -> ResponsesEvents:

# An RPC sent by leader to replicate log entries (see Raft §5.3)

# If entries is an empty list, this is meant to be a heartbeat (see Raft §5.2).

logger.info(

f"{self._log_name} Received new append entries request with {len(event.msg.entries)} entries"

)

success = self.log.append_entries( # type: ignore

prev_term=event.msg.prev_log_term,

prev_index=event.msg.prev_log_index,

entries=event.msg.entries,

)

self.voted_for = None # This looks like a great place for bugs

if success:

# We have a leader if we have received this event

self.known_leader_node_id = event.msg.leader_id # type: ignore

# Leader sends commit index: try to advance ours

new_commit_index = min(event.msg.leader_commit_index, len(self.log))

if new_commit_index > self.commit_index:

self.commit_index = new_commit_index

logger.info(

f"{self._log_name} Committed entries count is now {self.commit_index}"

)

if self.commit_index > self.last_applied:

entries = self.log.log[

self.last_applied + 1 : self.commit_index + 1

]

self.applied.extend(entries)

logger.info(f"{self._log_name} AppliedEntries={entries}")

self.last_applied = self.commit_indexWe have a lot of state to keep consistent in our minds to be able to understand this code:

- a “commit index”

- a “last applied index”

- the “log” (the real data store Raft is concerned with)

- the entries from our “log” that have been “applied”

The shortest explanation for what this code is attempting to do (and I offer it more as an example of an attempt than as a fully-thought-out solution to Raft) is as follows:

- The Raft node needs to make sure it has all the log entries the Leader knows about

- The Raft node must also know what entry is the latest entry “committed” to the cluster.

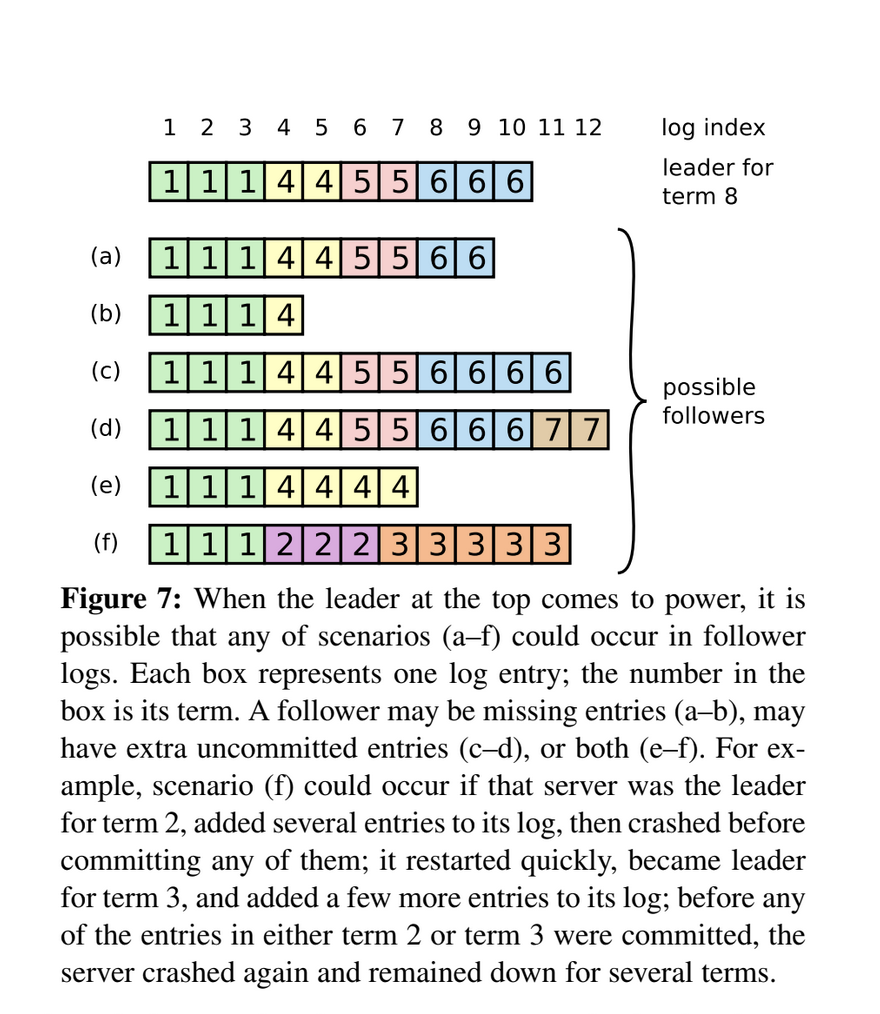

Now, this may sound straightforward, but the key thing about distributed systems is that they can fail in interesting ways. Consider the following diagram from the Raft paper on scenarios a newly elected leader may encounter:

The goal for Raft is consistency and it works to achieve this goal even though servers may go offline. These different failure modes are a great example of trying to keep a lot of information straight in our heads when we’re working on distributed systems.

The Devil Is in the Details

Building a software project based on a paper can sometimes feel like a “Draw the rest of the fucking owl!” exercise.

My goal in commenting on Raft here is not to present my half-working hobby projects as complete implementations. Instead, I’m interested in the ways that problems like Raft are alluring: they look solvable, but they’re pretty hard nuts to crack. They can fail in such interesting ways and anticipating those failures and preventing them or working around them can be a hugely frustrating exercise.

In other words, projects like Raft are attractive mountains to climb and to keep climbing even after you’ve summitted: they never stop being hard and thus they never stop being compelling. They’re always there.

Tags: Raft,Distributed Systems